Step aside, outdated security measures: there’s a new player in town, and it’s called deepfake fraud. Gone are the days of stolen credit cards and hacked passwords; these cyber villains are harnessing artificial intelligence to clone your face, voice, and mannerisms and steal your identity. Just ask the Hong Kong employee who fell victim to a $25 million deepfake scam. In 2023, attempts to bypass remote identity verification using deepfake-driven “face swaps” surged by 704%. Continuing the trend, from 2023 to 2024, deepfake-driven “face swap” attacks rose by 300%.

Our increasing reliance on digital services for everything from banking to healthcare to government benefits demands a secure and trustworthy way to verify our identities online. Traditional methods like usernames, passwords, and even multifactor authentication are increasingly vulnerable to AI-driven threats. Enter ID.me, the ultimate guardian of your digital identity. ID.me’s cutting-edge omnichannel verification solution is leading the charge against digital deception, ensuring your identity stays safe and secure online. Let’s dive into the trenches of digital identity verification and see firsthand how ID.me protects your online presence.

The Evolution of Digital Identity Verification

First, some history. The foundation for digital identity was laid in the early days of the Internet with the introduction of usernames and passwords. Biometric identity verification then became popular in the consumer market in the 2010s, as smartphone manufacturers deployed fingerprint sensors and facial scanners in newer phones to let users unlock their devices quickly and securely.

Using digital identity verification to access government agencies, however, requires higher standards for proof of identity than many consumer use cases. This is why the National Institute of Standards and Technology (NIST), an agency of the U.S. Department of Commerce, introduced the NIST Identity Assurance Levels (IAL) as a key component of the NIST Digital Identity Guidelines, NIST Special Publication 800-63-3. To verify identities remotely for access to public benefits today, many state and federal agencies have sought an identity verification solution certified at the requisite IAL for that specific use case.

How ID.me Is Countering Emerging Threats

As a result of the rising deepfake threat, the National Security Agency, Federal Bureau of Investigation, and Cybersecurity and Infrastructure Security Agency released the joint Cybersecurity Information Sheet “Contextualizing Deepfake Threats to Organizations” to help organizations identify, defend against, and respond to deepfakes.

To close off the new pathways that fraudsters are finding to exploit, ID.me offers an advanced digital identity verification solution that organizations can trust to prevent fraud and protect sensitive digital information. ID.me’s solution meets the federal standards for consumer authentication set by NIST and is approved as a NIST 800-63-3 IAL2 / AAL2 credential service provider by the Kantara Initiative.

ID.me employs a multi-faceted strategy to counteract fraudulent attempts, including deepfakes, which is critical in the context of securing public benefits access. This approach aligns with NIST guidelines and incorporates industry best practices across four primary phases: before verification, during verification, after verification, and continuous threat research and monitoring.

1. Before Verification:

ID.me implements a robust bot mitigation program to shield partners from advanced automated threats that simulate human interactions. This defense system includes security measures such as endpoint protection, security information and event management (SIEM) systems, perimeter firewalls, web application firewalls, and threat intelligence feeds. For instance, ID.me successfully countered a global botnet assault by integrating reCAPTCHA checks and JavaScript challenges. These controls made the platform more resilient against mass unauthorized attempts and mitigated risks before they could escalate into fraud attempts, including deepfake incursions.

2. During Verification:

The ID.me verification process addresses potential fraud by distinguishing between first-party, second-party, and third-party fraud types. It uses a comprehensive suite of tools:

- Document Verification and Synthetic Identity Detection: Uses machine learning and historical data analysis to detect inconsistencies in government IDs and synthetic identities, preventing unauthorized access before it occurs.

- Liveness Detection and 1:1 Matching: Collaborates with companies like iProov and Paravision to ensure that the individual presenting themselves online is genuine and not a deepfake. This technology, known as Presentation Attack Detection, uses advanced neural networks and Generative Adversarial Networks (GANs) to differentiate between real human traits and artificial replicas.

- Contextual Clues and SMS Consent Messaging: Aids in the identification and prevention of social engineering attempts, thereby indirectly protecting against fraud in which deepfakes may be used to manipulate victims.
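
To make the 1:1 matching idea in the list above concrete, here is a minimal sketch. It is not ID.me’s, iProov’s, or Paravision’s implementation, which the article does not describe. It assumes that a face model (not shown) has already turned the selfie and the ID photo into numeric embeddings and that a separate liveness check has returned a yes/no result. The function names, the 0.8 threshold, and the toy two-number embeddings are all hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def is_match(
    selfie_embedding: Sequence[float],
    id_photo_embedding: Sequence[float],
    liveness_passed: bool,
    threshold: float = 0.8,  # hypothetical cut-off, chosen only for illustration
) -> bool:
    """Accept only if the liveness check passed AND the two faces are similar enough."""
    if not liveness_passed:
        # A failed liveness check rejects the attempt regardless of similarity.
        return False
    return cosine_similarity(selfie_embedding, id_photo_embedding) >= threshold


# Toy embeddings; real face embeddings have hundreds of dimensions.
print(is_match([1.0, 0.0], [0.9, 0.1], liveness_passed=True))   # True
print(is_match([1.0, 0.0], [0.9, 0.1], liveness_passed=False))  # False
```

The point of the sketch is the ordering: a convincing face swap might score well on similarity, so the liveness result gates the decision before the comparison is even considered.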

3. After Verification:

Post-verification, ID.me maintains an industry-leading low false-positive rate in fraud identification through the use of AI and machine learning (ML) models coupled with human review and supervision. These systems are trained on vast datasets, enabling them to recognize the nuances of social engineering and third-party fraud. They use decision trees, clustering algorithms, and anomaly detection to flag unusual activities, effectively recognizing patterns indicative of deepfake manipulations or other fraudulent actions.
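
As a rough illustration of the anomaly detection mentioned above, the sketch below flags values that sit far from the rest of a sample using a modified z-score (median and median absolute deviation). This is a simple stand-in chosen for readability, not the models ID.me runs, which the article does not specify. The function name, the sample data (verification attempts per device per hour), and the 3.5 threshold are assumptions made for the example.

```python
from statistics import median
from typing import List, Sequence


def flag_anomalies(values: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return the indices of values whose modified z-score exceeds the threshold."""
    if not values:
        return []
    center = median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        # At least half the values equal the median, so this score is undefined.
        return []
    return [
        i
        for i, v in enumerate(values)
        if 0.6745 * abs(v - center) / mad > threshold
    ]


# Hypothetical data: verification attempts per device in one hour.
attempts_per_device = [1, 2, 1, 3, 2, 1, 40]
print(flag_anomalies(attempts_per_device))  # [6]
```

In practice a flag like this would only queue the activity for closer inspection, which fits the article’s description of pairing automated models with human review.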

4. Continuous Threat Research and Monitoring:

ID.me’s continuous engagement in threat intelligence programs enables the company to stay ahead of emerging fraud techniques, including deepfake technologies. By monitoring various platforms, including the dark web and I2P, and drawing on the resulting insights into new threats, ID.me can adapt its defenses in real time and integrate these findings into its fraud detection mechanisms.

ID.me’s technological defenses against deepfakes include:

- GANs and Neural Networks: Used in document verification and liveness detection to discern genuine human characteristics from those artificially generated.

- Machine Learning Models: Analyze behavior over time, detecting anomalies that could indicate synthetic identity fraud or manipulated documents.

- Presentation Attack Detection: Specifically designed to thwart deepfakes and other sophisticated spoofing attempts by verifying the physical presence of a live person.

- Continuous Learning: By constantly updating its models with new data, ID.me ensures its systems remain effective against the latest deepfake techniques.

ID.me’s approach to combating fraud, including the increasingly sophisticated area of deepfakes, is comprehensive and multifaceted. By integrating cutting-edge AI and ML technologies, conducting ongoing threat analysis, and employing rigorous verification procedures, ID.me maintains a robust defense system that aligns with the highest industry standards. This not only protects individuals’ identities but also preserves the integrity of the systems and benefits they seek to access.

ID.me in Action

Fraud threats reached a peak at the onset of the COVID-19 pandemic. With millions out of work, state unemployment agencies saw skyrocketing numbers of unemployment benefits claims, many of them fraudulent. For example, the state of Georgia saw 5 million unemployment claims in a short period during the pandemic, although the state only had approximately 10.5 million residents at the time. That works out to roughly one claim for every two residents.

Thanks to ID.me’s advanced fraud prevention capabilities, Georgia prevented an estimated $10 billion in fraudulent payments. Over the course of the pandemic, seven states credited ID.me with helping to prevent over $273 billion in fraud.

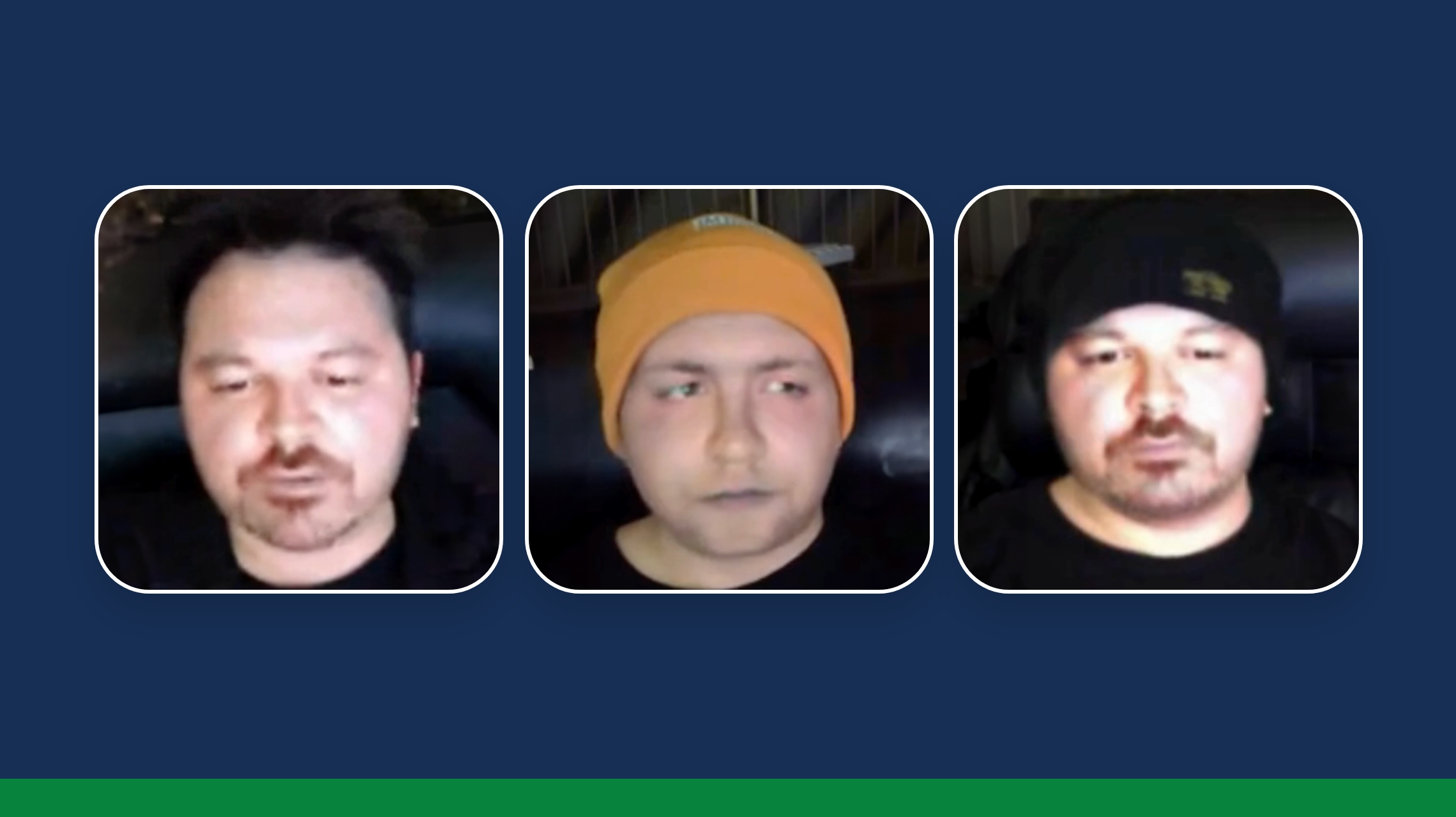

The answer: All three are deepfakes.

The Future of Identity Verification

The emergence of generative AI presents a double-edged sword in the context of identity theft and digital transactions. On one hand, these advanced algorithms enhance security protocols, improve verification processes, and aid in the detection of fraud. On the other hand, they furnish malicious actors with sophisticated tools for creating highly convincing deepfakes, synthetic identities, and counterfeit documents. As generative AI technologies evolve, we can anticipate an increase in the complexity and believability of these fraudulent constructs, making traditional detection methods less effective. This escalation could lead to an arms race between fraudsters using generative AI to create more sophisticated impersonation and fabrication tactics and defenders leveraging the same technology to counteract these threats.

In response to this evolving landscape, future defenses against AI-generated fraud will likely incorporate more dynamic, adaptive AI systems capable of learning and reacting to new fraudulent patterns in real time. These systems may utilize advanced machine learning techniques, such as unsupervised learning and reinforcement learning, to analyze vast datasets and identify subtle anomalies indicative of AI-generated fraud. Additionally, we may see a shift toward biometric and behavioral analysis, further integrating human elements that are challenging for AI to replicate perfectly. This approach, combined with the continued development of ethical AI frameworks and regulations, aims to maintain a balance between innovation and security, ensuring that digital identities and transactions remain protected in an increasingly AI-driven world.

Secure identity verification plays a critical role in combating fraud. That is why ID.me is built on a foundation of fraud prevention and security to offer organizations and users a reliable solution that they can trust. As the “identity layer of the Internet,” ID.me’s commitment to innovation and robust fraud prevention and security measures empowers users to confidently navigate the digital world, while safeguarding businesses and institutions from fraudulent activity. Together, we can ensure that the digital world remains a safe and secure space for everyone.

JT Taylor is ID.me’s Senior Director of Fraud Investigations and Operations.